This article shows how to install ZFS on a machine after NVMe disks have been mapped to it from a remote host over RDMA.

For how to set up NVMe over RDMA, see the previous article: NVMe over RDMA的安装配置 (Installing and Configuring NVMe over RDMA).

Note: all commands in this article were run on Debian.

Installing ZFS

Installing ZFS directly with `apt install -y zfsutils-linux` runs into problems. For details and fixes, see the section on errors and solutions at the end of this article.

Instead, install it with the following steps:

```
apt-get install dpkg-dev linux-headers-$(uname -r) linux-image-amd64
apt-get install zfs-dkms zfsutils-linux
modprobe zfs
```
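The headers step matters because `zfs-dkms` compiles the zfs kernel module against the headers of the running kernel. The bash function below is a minimal sketch for checking that beforehand; it assumes Debian's `linux-headers-<version>` package provides the `/lib/modules/<version>/build` link, and the function name `kernel_headers_present` is hypothetical. Also note that Debian ships the ZFS packages in the `contrib` component, so that component must be enabled in the APT sources.

```bash
# Hypothetical helper: succeed if the kernel build tree used by DKMS exists.
# Assumption: linux-headers-<version> provides /lib/modules/<version>/build.
kernel_headers_present() {
    local version="${1:-$(uname -r)}"   # kernel release, defaults to the running kernel
    local modroot="${2:-/lib/modules}"  # module root, overridable for testing
    [ -d "$modroot/$version/build" ]    # -d follows the symlink to /usr/src/...
}

# Example:
#   if ! kernel_headers_present; then
#       apt-get install -y "linux-headers-$(uname -r)"
#   fi
```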

Creating a storage pool

Create a ZFS storage pool (a plain striped pool, equivalent to RAID0, with no redundancy)

1. The machine must have the disks to use. This article uses the NVMe disks nvme0n1 and nvme1n1 as an example.

2. Check the pool status:

```
zpool status
```

3. Create the ZFS pool from nvme0n1 and nvme1n1. Once it is created, `zpool status` shows the result below. If you hit an error, see the solutions later in this article.

```
# zpool create mypool nvme0n1 nvme1n1
# zpool status
  pool: mypool
 state: ONLINE
  scan: none requested
config:

        NAME        STATE     READ WRITE CKSUM
        mypool      ONLINE       0     0     0
          nvme0n1   ONLINE       0     0     0
          nvme1n1   ONLINE       0     0     0

errors: No known data errors
```
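When there are several pools, reading the full `zpool status` output gets tedious. `zpool status -x` prints only pools with problems (or "all pools are healthy"). For scripts, the sketch below filters the output of `zpool list -H -o name,health` (scripted mode: no header, tab-separated) and prints every pool that is not ONLINE; the function name `unhealthy_pools` is hypothetical.

```bash
# Hypothetical helper: read "name<TAB>health" lines on stdin and
# print the name of every pool whose health is not ONLINE.
unhealthy_pools() {
    awk -F'\t' '$2 != "ONLINE" { print $1 }'
}

# Example:
#   zpool list -H -o name,health | unhealthy_pools
```

An empty result means every pool reported ONLINE.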

Creating such a simple pool with ZFS is of limited value, since it has no redundancy (similar to RAID0).

Remember to destroy the pool before creating the next one:

```
zpool destroy mypool
```

A simple mirror pool

Using the same two disks, nvme0n1 and nvme1n1, create a simple mirror pool:

```
# zpool create mypool mirror nvme0n1 nvme1n1
# zpool status
  pool: mypool
 state: ONLINE
  scan: none requested
config:

        NAME         STATE     READ WRITE CKSUM
        mypool       ONLINE       0     0     0
          mirror-0   ONLINE       0     0     0
            nvme0n1  ONLINE       0     0     0
            nvme1n1  ONLINE       0     0     0

errors: No known data errors
```

Creating a RAIDZ2 pool

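RAIDZ-2 uses double parity and can tolerate up to two failed disks. It is usually built with at least 4 disks (OpenZFS itself only requires one more disk than the number of parity disks, i.e. 3).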
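To compare the layouts in this article, the sketch below applies the rules of thumb from the OpenZFS zpoolconcepts man page: a mirror of N disks of size X holds about X and survives N-1 failures, and a raidz group with P parity disks holds about (N-P)*X and survives P failures. It is a rough estimate that ignores metadata, padding and reserved space, so real usable capacity is lower; the function name `estimate_vdev` is hypothetical.

```bash
# Hypothetical helper: rough usable capacity and fault tolerance of one vdev.
# Usage: estimate_vdev <stripe|mirror|raidz1|raidz2|raidz3> <disks> <disk size in GB>
# Ignores metadata, padding and reserved space, so real values are lower.
estimate_vdev() {
    local layout=$1 n=$2 size_gb=$3
    case "$layout" in
        stripe)
            echo "usable=$((n * size_gb))GB tolerates=0" ;;
        mirror)
            echo "usable=${size_gb}GB tolerates=$((n - 1))" ;;
        raidz1|raidz2|raidz3)
            local p=${layout#raidz}          # number of parity disks
            if (( n < p + 1 )); then         # OpenZFS minimum: parity + 1 disks
                echo "raidz$p needs at least $((p + 1)) disks" >&2
                return 1
            fi
            echo "usable=$(( (n - p) * size_gb ))GB tolerates=$p" ;;
        *)
            echo "unknown layout: $layout" >&2
            return 1 ;;
    esac
}

# Example: estimate_vdev raidz2 4 1000   (four 1000 GB disks)
```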

The example uses 4 NVMe disks: nvme0n1, nvme1n1, nvme2n1 and nvme3n1.

```
# zpool create zfstest raidz2 nvme0n1 nvme1n1 nvme2n1 nvme3n1
# zpool status
  pool: zfstest
 state: ONLINE
  scan: none requested
config:

        NAME         STATE     READ WRITE CKSUM
        zfstest      ONLINE       0     0     0
          raidz2-0   ONLINE       0     0     0
            nvme0n1  ONLINE       0     0     0
            nvme1n1  ONLINE       0     0     0
            nvme2n1  ONLINE       0     0     0
            nvme3n1  ONLINE       0     0     0

errors: No known data errors
```
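Because a pool layout is hard to change once created, it can help to preview it first. `zpool create -n` prints the configuration that would be used without creating anything. The wrapper below is a sketch: the function name `create_raidz2_pool`, the `DRY_RUN` variable and the 4-disk minimum (taken from this article's recommendation rather than the OpenZFS minimum of 3) are assumptions.

```bash
# Hypothetical wrapper: create a RAIDZ2 pool only if enough disks are given.
# MIN_RAIDZ2_DISKS follows this article's 4-disk recommendation (OpenZFS accepts 3).
MIN_RAIDZ2_DISKS=4

create_raidz2_pool() {
    local pool=$1; shift
    if (( $# < MIN_RAIDZ2_DISKS )); then
        echo "need at least $MIN_RAIDZ2_DISKS disks for $pool, got $#" >&2
        return 1
    fi
    if [ "${DRY_RUN:-0}" = 1 ]; then
        zpool create -n "$pool" raidz2 "$@"   # -n: show the layout only
    else
        zpool create "$pool" raidz2 "$@"
    fi
}

# Example:
#   DRY_RUN=1 create_raidz2_pool zfstest nvme0n1 nvme1n1 nvme2n1 nvme3n1
```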

Creating a dataset (file system) on the pool

Use `zfs list` to view the zfstest pool created above:

```
# zfs list
NAME      USED  AVAIL  REFER  MOUNTPOINT
zfstest   114K  2.80T  32.9K  /zfstest
```

Create the dataset test:

```
# zfs create zfstest/test
# zfs list
NAME           USED  AVAIL  REFER  MOUNTPOINT
zfstest        151K  2.80T  32.9K  /zfstest
zfstest/test  32.9K  2.80T  32.9K  /zfstest/test
```
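`zfs create` fails if the dataset already exists, which is inconvenient when a setup script is re-run. The sketch below first checks for the dataset with `zfs list -H -o name <dataset>`, which exits non-zero when the dataset is missing; the function name `ensure_dataset` is hypothetical.

```bash
# Hypothetical helper: create a dataset only if it does not exist yet,
# so a setup script can be run more than once.
ensure_dataset() {
    local ds=$1
    if zfs list -H -o name "$ds" >/dev/null 2>&1; then
        echo "exists: $ds"
    else
        zfs create "$ds" && echo "created: $ds"
    fi
}

# Example: ensure_dataset zfstest/test
```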

Destroying a ZFS dataset

Datasets are destroyed with the `zfs destroy` command. The following example destroys the dataset tabriz:

```
# zfs destroy tank/home/tabriz
```

Note: if the dataset to be destroyed is busy and cannot be unmounted, use the -f option to force the destruction.

```
# zfs destroy tank/home/ahrens
cannot unmount 'tank/home/ahrens': Device busy
# zfs destroy -f tank/home/ahrens
```

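I also made a mistake here: I ran `zfs destroy zfstest/test` from inside the /zfstest/test/ directory and kept getting the error below. Adding -f did not help either; the fix was simply to leave that directory first.

```
umount: /zfstest/test: target is busy.
cannot unmount '/zfstest/test': umount failed
```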
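The mistake described above can be caught before calling `zfs destroy`. The sketch below looks up the dataset's mountpoint with `zfs get -H -o value mountpoint <dataset>` and refuses to continue while the current shell is inside it. It only checks the current shell; other processes can still keep the file system busy (`fuser -vm <mountpoint>` lists them). The names `cwd_inside` and `safe_destroy` are hypothetical.

```bash
# Hypothetical helper: succeed if directory $2 (default: $PWD) is $1 or below it.
cwd_inside() {
    local dir=$1 cwd=${2:-$PWD}
    [ "$cwd" = "$dir" ] || [[ "$cwd" == "$dir"/* ]]
}

# Hypothetical wrapper: refuse to destroy a dataset while the current shell
# sits inside its mountpoint (the "target is busy" case).
safe_destroy() {
    local ds=$1 mnt
    mnt=$(zfs get -H -o value mountpoint "$ds") || return 1
    if cwd_inside "$mnt"; then
        echo "refusing: $PWD is inside $mnt; cd elsewhere first" >&2
        return 1
    fi
    zfs destroy "$ds"
}

# Example: cd / && safe_destroy zfstest/test
```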

Testing performance with FIO

Large-file single-threaded write

```
root@XXX:/zfstest/test# fio --group_reporting --time_based --norandommap --name=big-file-single-write --directory=/zfstest/test --rw=write --bs=4M --size=10G --runtime=300 --numjobs=1 --output big-file-single-write.txt
```

```
# Same test with --iodepth=32. Note: fio's default ioengine (psync) is synchronous,
# so iodepth values above 1 have no effect unless an asynchronous ioengine is selected.
root@XXX# fio --group_reporting --time_based --iodepth=32 --norandommap --name=big-file-single-write --directory=/zfstest/test --rw=write --bs=4M --size=10G --runtime=300 --numjobs=1 --output big-file-single-write.txt
```
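When several runs are compared, it is handy to pull the aggregate bandwidth out of the report files written by `--output`. The sketch below assumes fio 3.x's text format, in which the group summary ("Run status group …") contains a line starting with `WRITE: bw=`; per-job lines use lowercase `write:` and are not matched. The function name `write_bw` is hypothetical, and for robust parsing `--output-format=json` is the better choice.

```bash
# Hypothetical helper: print the aggregate write bandwidth from a fio text report.
# Assumption: fio 3.x summary line of the form "  WRITE: bw=<value> (...), ..."
write_bw() {
    sed -n 's/^[[:space:]]*WRITE: bw=\([^ ]*\).*/\1/p' "$1"
}

# Example: write_bw big-file-single-write.txt
```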

Large-file concurrent write

```
root@XXX:/zfstest/test# fio --group_reporting --time_based --iodepth=32 --norandommap --name=big-file-multi-write --directory=/zfstest/test/ --rw=write --bs=4M --size=10G --runtime=300 --numjobs=16 --output big-file-multi-write.txt
```
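To see how throughput scales with concurrency, the same sequential-write test can be repeated for several job counts. In this sketch the job counts 1, 4 and 16 and the function name `run_write_sweep` are arbitrary assumptions. `--iodepth` and `--norandommap` are left out because they have no effect here (the default psync engine is synchronous, and the workload is sequential).

```bash
# Hypothetical helper: run the large-file sequential write once per job count,
# writing one fio report per run (big-file-write-<jobs>.txt).
run_write_sweep() {
    local dir=${1:-/zfstest/test} jobs
    for jobs in 1 4 16; do
        fio --group_reporting --time_based --runtime=300 \
            --name="big-file-write-$jobs" --directory="$dir" \
            --rw=write --bs=4M --size=10G --numjobs="$jobs" \
            --output="big-file-write-$jobs.txt"
    done
}

# Example: run_write_sweep /zfstest/test
```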

Errors encountered and solutions

`zpool status` reports "ZFS modules are not loaded"

```
zpool status
```

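Solution: load the module with `modprobe zfs`. You will most likely run into the next problem, though; once that one is solved, this one is solved too. See the solution to the next problem for details.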
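Before running `zpool`, a script can check whether the module is loaded at all. `/proc/modules` lists one loaded module per line with the module name first, so a line starting with `zfs ` means the module is present. A minimal sketch; the function name `zfs_module_loaded` is hypothetical.

```bash
# Hypothetical helper: succeed if the zfs kernel module is currently loaded.
zfs_module_loaded() {
    local modules=${1:-/proc/modules}   # overridable for testing
    grep -q '^zfs ' "$modules"
}

# Example:
#   zfs_module_loaded || modprobe zfs
```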

`modprobe zfs` reports "Module zfs not found in directory"

```
~# modprobe zfs
```

Solution: first install the kernel headers, then reinstall the ZFS components, as follows:

```
apt-get install dpkg-dev linux-headers-$(uname -r) linux-image-amd64
apt-get install zfs-dkms zfsutils-linux
modprobe zfs
```
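This error means no zfs module file exists for the running kernel, so `modprobe` has nothing to load. The sketch below checks for one; it assumes DKMS places the built module somewhere under `/lib/modules/<version>/` (typically `updates/dkms/`), so it searches the whole tree, and it also accepts compressed modules (`zfs.ko.xz`, `zfs.ko.zst`). `dkms status` shows the same information from the DKMS side. The function name `zfs_module_installed` is hypothetical.

```bash
# Hypothetical helper: succeed if a zfs module file exists for the given kernel.
zfs_module_installed() {
    local version="${1:-$(uname -r)}"
    local modroot="${2:-/lib/modules}"   # overridable for testing
    # -print -quit stops at the first match (GNU find)
    [ -n "$(find "$modroot/$version" -name 'zfs.ko*' -print -quit 2>/dev/null)" ]
}

# Example:
#   zfs_module_installed || echo "zfs module not built for $(uname -r)"
```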

This fixes the problem, and the previous one along with it:

```
# zpool status
no pools available
```

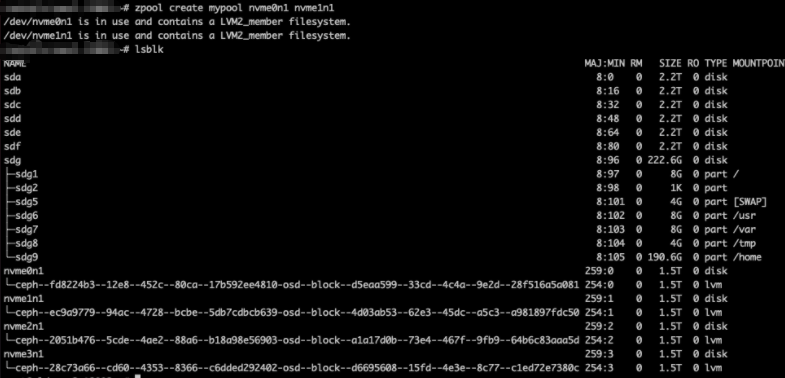

Creating a pool fails with "xx is in use and contains a LVM2_member filesystem"

```
# zpool create mypool nvme0n1 nvme1n1
```

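Solution: I had previously deployed Ceph in this environment, and it was already using these two disks. Clean up that environment and delete the corresponding LVM volumes. For how to remove LVM, see the article 关于LVM的概念及相关操作 (LVM concepts and related operations).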

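Remove the volume groups:

```
# Remove every VG whose name contains "ceph" (sort -u: a VG spanning several PVs is removed once)
for vg in $(pvs --noheadings -o vg_name | grep ceph | sort -u); do vgremove -y "$vg"; done
```

Remove the physical volumes:

```
# Caution: this removes every PV on the host, not only the two NVMe disks
for pv in $(pvs --noheadings -o pv_name); do pvremove -y "$pv"; done
```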
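After the cleanup, you can confirm that the disks no longer carry an LVM signature before retrying `zpool create`. `lsblk -dno FSTYPE <device>` prints only the file system type of the device itself, which is `LVM2_member` while the PV still exists. A minimal sketch; the function name `disks_with_lvm` is hypothetical.

```bash
# Hypothetical helper: print each given disk that still reports an LVM2_member
# signature (these would still make `zpool create` fail with "is in use").
disks_with_lvm() {
    local dev
    for dev in "$@"; do
        if [ "$(lsblk -dno FSTYPE "$dev" 2>/dev/null)" = "LVM2_member" ]; then
            echo "$dev"
        fi
    done
}

# Example: disks_with_lvm /dev/nvme0n1 /dev/nvme1n1
```

No output means both disks are free of LVM signatures.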

Destroying a dataset fails with "cannot unmount 'tank/home/ahrens': Device busy"

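Solution:

- Add the -f option.

- If that still fails, check whether your shell is inside the directory being unmounted; switch to another directory and run the command again.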